Nextcloud#

All configurations presented here use docker-compose. Read the Docker instructions first.

Warning

The contents of this page have not been tested for all Nextcloud versions.

Nextcloud on Docker setups#

Some variables are self-explanatory, while others need an explanation:

| Variable name | Description |
|---|---|
|  | directory containing user files, Nextcloud logs and application data |
|  | Elasticsearch data directory |
|  | Elasticsearch plugins directory |
|  | domain name |
|  | directory containing Nextcloud’s HTML code and configurations. You can mount this directory on an SSD |
|  | a username of the Nextcloud instance |
|  | a password corresponding to the username above |

MariaDB hosted on a Docker container#


follow the Docker instructions

create the jobs directories. See reference

    mkdir -p /home/jobs/scripts/by-user/root/docker/nextcloud
    cd /home/jobs/scripts/by-user/root/docker/nextcloud

create a Docker compose file

/home/jobs/scripts/by-user/root/docker/nextcloud/docker-compose.yml

    version: '2'

    volumes:
      nextcloud:
      db:
      redis:

    services:
      # See
      # https://help.nextcloud.com/t/mariadb-upgrade-from-10-5-11-to-10-6-causes-internal-server-error/120585
      db:
        hostname: db
        image: mariadb:10.5.11
        restart: always
        command: --transaction-isolation=READ-COMMITTED --binlog-format=ROW
        volumes:
          - ./db:/var/lib/mysql
        environment:
          - MYSQL_ROOT_PASSWORD=${DATABASE_ROOT_PASSWORD}
          - MYSQL_PASSWORD=${DATABASE_PASSWORD}
          - MYSQL_DATABASE=nextcloud
          - MYSQL_USER=nextcloud

      app:
        image: nextcloud:production
        restart: always
        ports:
          - 4005:80
        links:
          - db
          - redis
        volumes:
          - ${DATA_PATH}:/var/www/html/data
          - ${HTML_DATA_PATH}:/var/www/html
        environment:
          - REDIS_HOST=redis
          - REDIS_HOST_PORT=6379
          - MYSQL_PASSWORD=${DATABASE_PASSWORD}
          - MYSQL_DATABASE=nextcloud
          - MYSQL_USER=nextcloud
          - MYSQL_HOST=db
          - APACHE_DISABLE_REWRITE_IP=1
          - TRUSTED_PROXIES=127.0.0.1
          - OVERWRITEHOST=${FQDN}
          - OVERWRITEPROTOCOL=https
          - OVERWRITECLIURL=https://${FQDN}

      redis:
        image: redis:6.2.5
        restart: always

Note

Replace these variables with appropriate values: DATABASE_ROOT_PASSWORD, DATABASE_PASSWORD, DATA_PATH, HTML_DATA_PATH, FQDN
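Before deploying, it can help to check that no placeholder was left undefined. The following is a minimal sketch, not part of the original setup: it assumes the compose file is kept as a template with `${NAME}` placeholders, and the helper names `missing_variables` and `render_compose` are hypothetical.

```python
import re
from string import Template

# Hypothetical excerpt of the compose file above, kept as a template.
COMPOSE_TEMPLATE = """\
environment:
  - MYSQL_ROOT_PASSWORD=${DATABASE_ROOT_PASSWORD}
  - MYSQL_PASSWORD=${DATABASE_PASSWORD}
  - OVERWRITEHOST=${FQDN}
volumes:
  - ${DATA_PATH}:/var/www/html/data
  - ${HTML_DATA_PATH}:/var/www/html
"""


def missing_variables(template: str, values: dict) -> list:
    """Return the ${NAME} placeholders that have no value, sorted by name."""
    names = set(re.findall(r'\$\{([A-Za-z_][A-Za-z0-9_]*)\}', template))
    return sorted(names - set(values))


def render_compose(template: str, values: dict) -> str:
    """Substitute every placeholder and fail loudly if one is undefined."""
    missing = missing_variables(template, values)
    if missing:
        raise KeyError('undefined variables: ' + ', '.join(missing))
    return Template(template).substitute(values)


if __name__ == '__main__':
    # Example values only: replace them with your own.
    example_values = {
        'DATABASE_ROOT_PASSWORD': 'change-me-root',
        'DATABASE_PASSWORD': 'change-me',
        'FQDN': 'cloud.example.org',
        'DATA_PATH': '/data/nextcloud/data',
        'HTML_DATA_PATH': '/data/nextcloud/html',
    }
    print(render_compose(COMPOSE_TEMPLATE, example_values))
```

Docker Compose can also read these values from the environment or from an `.env` file, so a rendering step like this is optional.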

create a Systemd unit file. See also the Docker compose services section

/home/jobs/services/by-user/root/docker-compose.nextcloud.service

    [Unit]
    Requires=docker.service
    Requires=network-online.target
    After=docker.service
    After=network-online.target

    # Enable these for the "scalable" variant.
    # Requires=redis.service
    # Requires=redis-socket.service
    # After=redis.service
    # After=redis-socket.service

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    ExecStart=/usr/bin/docker-compose up --remove-orphans
    ExecStop=/usr/bin/docker-compose down --remove-orphans

    Restart=always

    [Install]
    WantedBy=multi-user.target

fix the permissions

    chmod 700 /home/jobs/scripts/by-user/root/docker/nextcloud
    chmod 700 -R /home/jobs/services/by-user/root

run the deploy script

set the reverse proxy port in your webserver configuration to 4005

Migration from MariaDB in a container to PostgreSQL on the host#

check that your PostgreSQL setup is fully configured and running

add an entry in the pg_hba.conf file

/etc/postgresql/13/main/pg_hba.conf

    host    nextclouddb    nextcloud    172.16.0.0/16    scram-sha-256

change this setting in postgresql.conf

/etc/postgresql/13/main/postgresql.conf

    listen_addresses = '*'

restart Postgres

    systemctl restart postgresql.service

stop the Nextcloud container

    systemctl stop docker-compose.nextcloud.service

convert the database type. You will be prompted for the database password

    docker-compose exec --user www-data app php occ db:convert-type --all-apps pgsql ${DATABASE_USER} ${DATABASE_HOST} ${DATABASE_NAME}

The Docker container must now communicate with the PostgreSQL instance running on bare metal. The variables used here refer to the PostgreSQL instance.

use this Docker compose file instead. See also the Docker compose services section

/home/jobs/scripts/by-user/root/docker/nextcloud/docker-compose.yml

    # PostgreSQL in host.
    version: '2'

    volumes:
      nextcloud:
      redis:

    services:
      app:
        image: nextcloud:production
        restart: always
        ports:
          - 4005:80
        links:
          - redis
        volumes:
          - ${DATA_PATH}:/var/www/html/data
          - ${HTML_DATA_PATH}:/var/www/html
        environment:
          - REDIS_HOST=redis
          - REDIS_HOST_PORT=6379
          - POSTGRES_DB=${DATABASE_NAME}
          - POSTGRES_USER=${DATABASE_USER}
          - POSTGRES_HOST=${DATABASE_HOST}
          - POSTGRES_PASSWORD=${DATABASE_PASSWORD}
          - APACHE_DISABLE_REWRITE_IP=1
          - TRUSTED_PROXIES=127.0.0.1
          - OVERWRITEHOST=${FQDN}
          - OVERWRITEPROTOCOL=https
          - OVERWRITECLIURL=https://${FQDN}

      redis:
        image: redis:6.2.5
        restart: always
        hostname: redis
        volumes:
          - redis:/var/lib/redis

Note

Replace DATABASE_NAME, DATABASE_USER, DATABASE_PASSWORD, DATABASE_HOST, DATA_PATH, HTML_DATA_PATH and FQDN with appropriate values. DATABASE_HOST may be different from FQDN if PostgreSQL is not reachable through the Internet or is running on a different machine.

run the deploy script
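The pg_hba.conf entry above only accepts clients whose address falls inside 172.16.0.0/16. As a rough sanity check, the sketch below tests whether a container address would match that network. The function name `allowed_by_pg_hba` is hypothetical and the sample addresses are illustrative: the real container address depends on your Docker network configuration.

```python
import ipaddress

# Network allowed by the pg_hba.conf entry shown in this section.
PG_HBA_NETWORK = ipaddress.ip_network('172.16.0.0/16')


def allowed_by_pg_hba(client_ip: str, network=PG_HBA_NETWORK) -> bool:
    """Return True if client_ip falls inside the network listed in pg_hba.conf."""
    return ipaddress.ip_address(client_ip) in network


if __name__ == '__main__':
    # Illustrative addresses, not taken from a real deployment.
    for ip in ('172.16.5.2', '172.17.0.2', '192.168.1.10'):
        print(ip, allowed_by_pg_hba(ip))
```

If the container address falls outside the listed network, PostgreSQL rejects the connection, so the network in pg_hba.conf may need to be adapted to your setup.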

A more scalable setup#

For a more robust setup you must separate some of the components. In this example Nextcloud’s FPM image, the NGINX webserver and ClamAV (optional) run in Docker. Redis runs on the host machine and is accessed by the Docker containers.

See also

Access host socket from container - socat - IT Playground Blog [6]

follow the Docker instructions

create the jobs directories. See reference

    mkdir -p /home/jobs/scripts/by-user/root/docker/nextcloud
    cd /home/jobs/scripts/by-user/root/docker/nextcloud

create a Docker compose file

/home/jobs/scripts/by-user/root/docker/nextcloud/docker-compose.yml

    version: '2'

    volumes:
      app:
      web:

    services:
      web:
        image: nginx:1.23.1
        restart: always
        ports:
          - 127.0.0.1:4005:80
        links:
          - app
        volumes:
          - ./nginx.conf:/etc/nginx/nginx.conf
        volumes_from:
          - app
        networks:
          - mynetwork

      app:
        image: nextcloud:24.0.5-fpm
        restart: always
        links:
          - clamav
        volumes:
          - ${DATA_PATH}:/var/www/html/data
          - ${HTML_DATA_PATH}:/var/www/html
          - ${PHP_CONFIG_DATA_PATH}:/usr/local/etc/php/conf.d
          - /var/run/redis.sock:/var/run/redis.sock
        environment:
          - TZ=Europe/Rome
          - REDIS_HOST=/var/run/redis.sock
          - REDIS_HOST_PORT=0
          - POSTGRES_DB=${DATABASE_NAME}
          - POSTGRES_USER=${DATABASE_USER}
          - POSTGRES_HOST=${DATABASE_HOST}
          - POSTGRES_PASSWORD=${DATABASE_PASSWORD}
          - APACHE_DISABLE_REWRITE_IP=1
          - TRUSTED_PROXIES=127.0.0.1
          - OVERWRITEHOST=${FQDN}
          - OVERWRITEPROTOCOL=https
          - OVERWRITECLIURL=https://${FQDN}
          - PHP_UPLOAD_LIMIT=8G

Note

Replace DATABASE_ROOT_PASSWORD, DATABASE_PASSWORD, DATA_PATH, HTML_DATA_PATH, PHP_CONFIG_DATA_PATH and FQDN with appropriate values.

create a Systemd unit file. See also the Docker compose services section

/home/jobs/services/by-user/root/docker-compose.nextcloud.service

    [Unit]
    Requires=docker.service
    Requires=network-online.target
    After=docker.service
    After=network-online.target

    # Enable these for the "scalable" variant.
    # Requires=redis.service
    # Requires=redis-socket.service
    # After=redis.service
    # After=redis-socket.service

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    ExecStart=/usr/bin/docker-compose up --remove-orphans
    ExecStop=/usr/bin/docker-compose down --remove-orphans

    Restart=always

    [Install]
    WantedBy=multi-user.target

follow the Redis instructions

create a copy of the Redis socket with specific permissions. Use this Systemd service.

/home/jobs/services/by-user/root/redis-socket.service

    [Unit]
    Requires=network-online.target
    Requires=redis.service
    After=network-online.target
    After=redis.service

    [Service]
    Type=simple
    ExecStart=/usr/bin/socat UNIX-LISTEN:/var/run/redis.sock,user=33,group=33,mode=0660,fork UNIX-CLIENT:/var/run/redis/redis-server.sock
    Restart=always

    [Install]
    WantedBy=multi-user.target

The Redis socket must be owned by the same user id (in this case 33) as the Nextcloud files in the Docker image. To get the ids run this command

    docker-compose exec -T --user www-data app id

You should get something like

    uid=33(www-data) gid=33(www-data) groups=33(www-data)
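The ids reported by the command can be turned into the socat options used in the unit file. This is only an illustrative sketch: the helper names `parse_id_output` and `socat_listen_options` are hypothetical, and the socket path and mode simply mirror the values used in redis-socket.service.

```python
import re


def parse_id_output(line: str) -> tuple:
    """Extract the numeric uid and gid from a line printed by the id command."""
    match = re.search(r'uid=(\d+)\S*\s+gid=(\d+)', line)
    if match is None:
        raise ValueError('unexpected id output: ' + line)
    return int(match.group(1)), int(match.group(2))


def socat_listen_options(uid: int, gid: int,
                         path: str = '/var/run/redis.sock') -> str:
    """Build the UNIX-LISTEN argument with the given owner, as in the unit file."""
    return f'UNIX-LISTEN:{path},user={uid},group={gid},mode=0660,fork'


if __name__ == '__main__':
    uid, gid = parse_id_output(
        'uid=33(www-data) gid=33(www-data) groups=33(www-data)')
    print(socat_listen_options(uid, gid))
```

If the ids differ from 33 in your image, the user and group values in the ExecStart line of redis-socket.service need to be changed accordingly.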

copy the PHP configuration from the image to PHP_CONFIG_DATA_PATH. First comment out the PHP_CONFIG_DATA_PATH volume in the docker-compose file, then run

    docker-compose up --remove-orphans
    docker cp $(docker container ls | grep nextcloud:24.0.5-fpm | awk '{print $1}'):/usr/local/etc/php/conf.d "${PHP_CONFIG_DATA_PATH}"
    docker-compose down

use this

NGINX configuration fileas example./home/jobs/scripts/by-user/root/docker/nextcloud/nginx.conf#worker_processes auto; error_log /var/log/nginx/error.log warn; pid /var/run/nginx.pid; events { worker_connections 1024; } http { include /etc/nginx/mime.types; default_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; sendfile on; #tcp_nopush on; # Prevent nginx HTTP Server Detection server_tokens off; keepalive_timeout 65; #gzip on; upstream php-handler { server app:9000; } server { server_name ${FQDN} listen 80; # HSTS settings # WARNING: Only add the preload option once you read about # the consequences in https://hstspreload.org/. This option # will add the domain to a hardcoded list that is shipped # in all major browsers and getting removed from this list # could take several months. add_header Strict-Transport-Security "max-age=15768000; includeSubDomains; preload;" always; # set max upload size client_max_body_size 512M; fastcgi_buffers 64 4K; # Enable gzip but do not remove ETag headers gzip on; gzip_vary on; gzip_comp_level 4; gzip_min_length 256; gzip_proxied expired no-cache no-store private no_last_modified no_etag auth; gzip_types application/atom+xml application/javascript application/json application/ld+json application/manifest+json application/rss+xml application/vnd.geo+json application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/bmp image/svg+xml image/x-icon text/cache-manifest text/css text/plain text/vcard text/vnd.rim.location.xloc text/vtt text/x-component text/x-cross-domain-policy; # Pagespeed is not supported by Nextcloud, so if your server is built # with the `ngx_pagespeed` module, uncomment this line to disable it. 
#pagespeed off; # HTTP response headers borrowed from Nextcloud `.htaccess` add_header Referrer-Policy "no-referrer" always; add_header X-Content-Type-Options "nosniff" always; add_header X-Download-Options "noopen" always; add_header X-Frame-Options "SAMEORIGIN" always; add_header X-Permitted-Cross-Domain-Policies "none" always; add_header X-Robots-Tag "none" always; add_header X-XSS-Protection "1; mode=block" always; # Remove X-Powered-By, which is an information leak fastcgi_hide_header X-Powered-By; # Path to the root of your installation root /var/www/html; # Specify how to handle directories -- specifying `/index.php$request_uri` # here as the fallback means that Nginx always exhibits the desired behaviour # when a client requests a path that corresponds to a directory that exists # on the server. In particular, if that directory contains an index.php file, # that file is correctly served; if it doesn't, then the request is passed to # the front-end controller. This consistent behaviour means that we don't need # to specify custom rules for certain paths (e.g. images and other assets, # `/updater`, `/ocm-provider`, `/ocs-provider`), and thus # `try_files $uri $uri/ /index.php$request_uri` # always provides the desired behaviour. index index.php index.html /index.php$request_uri; # Rule borrowed from `.htaccess` to handle Microsoft DAV clients location = / { if ( $http_user_agent ~ ^DavClnt ) { return 302 /remote.php/webdav/$is_args$args; } } location = /robots.txt { allow all; log_not_found off; access_log off; } # Make a regex exception for `/.well-known` so that clients can still # access it despite the existence of the regex rule # `location ~ /(\.|autotest|...)` which would otherwise handle requests # for `/.well-known`. location ^~ /.well-known { # The rules in this block are an adaptation of the rules # in `.htaccess` that concern `/.well-known`. 
location = /.well-known/carddav { return 301 https://${FQDN}/remote.php/dav/; } location = /.well-known/caldav { return 301 https://${FQDN}/remote.php/dav/; } location = /.well-known/webfinger { return 301 https://${FQDN}/index.php/.well-known/webfinger; } location = /.well-known/nodeinfo { return 301 https://${FQDN}/index.php/.well-known/nodeinfo; } location /.well-known/acme-challenge { try_files $uri $uri/ =404; } location /.well-known/pki-validation { try_files $uri $uri/ =404; } # Let Nextcloud's API for `/.well-known` URIs handle all other # requests by passing them to the front-end controller. return 301 /index.php$request_uri; } # Rules borrowed from `.htaccess` to hide certain paths from clients location ~ ^/(?:build|tests|config|lib|3rdparty|templates|data)(?:$|/) { return 404; } location ~ ^/(?:\.|autotest|occ|issue|indie|db_|console) { return 404; } # Ensure this block, which passes PHP files to the PHP process, is above the blocks # which handle static assets (as seen below). If this block is not declared first, # then Nginx will encounter an infinite rewriting loop when it prepends `/index.php` # to the URI, resulting in a HTTP 500 error response. 
location ~ \.php(?:$|/) { # Required for legacy support rewrite ^/(?!index|remote|public|cron|core\/ajax\/update|status|ocs\/v[12]|updater\/.+|oc[ms]-provider\/.+|.+\/richdocumentscode\/proxy) /index.php$request_uri; fastcgi_split_path_info ^(.+?\.php)(/.*)$; set $path_info $fastcgi_path_info; try_files $fastcgi_script_name =404; include fastcgi_params; fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name; fastcgi_param PATH_INFO $path_info; #fastcgi_param HTTPS on; fastcgi_param modHeadersAvailable true; # Avoid sending the security headers twice fastcgi_param front_controller_active true; # Enable pretty urls fastcgi_pass php-handler; fastcgi_intercept_errors on; fastcgi_request_buffering off; } location ~ \.(?:css|js|svg|gif)$ { try_files $uri /index.php$request_uri; expires 6M; # Cache-Control policy borrowed from `.htaccess` access_log off; # Optional: Don't log access to assets } location ~ \.woff2?$ { try_files $uri /index.php$request_uri; expires 7d; # Cache-Control policy borrowed from `.htaccess` access_log off; # Optional: Don't log access to assets } # Rule borrowed from `.htaccess` location /remote { return 301 /remote.php$request_uri; } location / { try_files $uri $uri/ /index.php$request_uri; } } }

Note

Replace FQDN with the appropriate value.

edit the Redis configuration in the PHP config (${HTML_DATA_PATH}/config/config.php) like this

    # [ ... ]
    'memcache.distributed' => '\\OC\\Memcache\\Redis',
    'memcache.locking' => '\\OC\\Memcache\\Redis',
    'redis' =>
    array (
      'host' => '/var/run/redis.sock',
      'password' => '',
    ),
    # [ ... ]

run the deploy script

set the reverse proxy port in your webserver configuration to 4005

connect to your Nextcloud instance as admin and configure the antivirus app from the settings. Go to Settings -> Security -> Antivirus for Files

Mode: ClamAV Daemon

Host: clamav

Port: 3310

When infected files are found during a background scan: Delete file
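Before configuring the app it may be useful to verify that the ClamAV daemon answers on the host and port given above. The sketch below relies on clamd's `PING` command, which replies with `PONG`. The function names are hypothetical, and the host name `clamav` is assumed to resolve only from inside the Docker network, so the check would have to run from a container attached to it.

```python
import socket


def clamd_ping(sock: socket.socket) -> bool:
    """Send a null-terminated PING on a connected socket and expect PONG."""
    sock.sendall(b'zPING\0')
    reply = sock.recv(64)
    return reply.rstrip(b'\0\n') == b'PONG'


def check_clamav(host: str = 'clamav', port: int = 3310,
                 timeout: float = 5.0) -> bool:
    """Open a TCP connection to clamd and run the PING check."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        return clamd_ping(sock)


if __name__ == '__main__':
    try:
        print('clamd reachable:', check_clamav())
    except OSError as exc:
        print('clamd not reachable:', exc)
```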


Apache configuration#

Clearnet#

serve the files via HTTP by creating a new Apache virtual host. Replace FQDN with the appropriate domain and include this file from the Apache configuration

/etc/apache2/nextcloud.apache.conf

    ##############
    # Nextcloud #
    ##############

    <IfModule mod_ssl.c>
    <VirtualHost *:80>
        ServerName ${FQDN}

        # Force https.
        UseCanonicalName on
        RewriteEngine on
        RewriteCond %{SERVER_NAME} =${FQDN}

        # Ignore rewrite rules for 127.0.0.1
        RewriteCond %{HTTP_HOST} !=127.0.0.1
        RewriteCond %{REMOTE_ADDR} !=127.0.0.1

        RewriteRule ^ https://%{SERVER_NAME}%{REQUEST_URI} [END,NE,R=permanent]
    </VirtualHost>
    </IfModule>

    <IfModule mod_ssl.c>
    <VirtualHost *:443>
        UseCanonicalName on
        Keepalive On
        ServerName ${FQDN}
        SSLCompression off

        # HSTS
        <IfModule mod_headers.c>
            Header always set Strict-Transport-Security "max-age=15552000; includeSubDomains"
        </IfModule>

        RequestHeader set X-Forwarded-Proto "https"
        RequestHeader set X-Forwarded-For "app"
        RequestHeader set X-Forwarded-Port "443"
        RequestHeader set X-Forwarded-Host "${FQDN}"

        ProxyPass / http://127.0.0.1:4005/
        ProxyPassReverse / http://127.0.0.1:4005/

        Include /etc/letsencrypt/options-ssl-apache.conf
        SSLCertificateFile /etc/letsencrypt/live/${FQDN}/fullchain.pem
        SSLCertificateKeyFile /etc/letsencrypt/live/${FQDN}/privkey.pem
    </VirtualHost>
    </IfModule>

TOR#


install TOR

    apt-get install tor

create a new onion service. See the /etc/tor/torrc file

create a new variable in the main Apache configuration file, usually /etc/apache2/apache2.conf

    Define NEXTCLOUD_DOMAIN your.FQDN.domain.org

similarly to the clearnet version, create a new Apache virtual host. Replace the TOR service URL with the one you want to use

/etc/apache2/nextcloud_tor.apache.conf

    # SPDX-FileCopyrightText: 2023 Franco Masotti
    #
    # SPDX-License-Identifier: MIT

    #############
    # Nextcloud #
    #############

    # Required
    Listen 8765

    <IfModule mod_ssl.c>
    <VirtualHost 127.0.0.1:8765>
        UseCanonicalName on
        ProxyPreserveHost On
        ProxyRequests off
        AllowEncodedSlashes NoDecode

        Keepalive On
        RewriteEngine on

        ServerName z7lsmsostnhiadxeuc6f5lto5jssa3j4c3jxaol6tvjavsxarf5agyyd.onion

        SSLCompression off

        Header edit Location ^https://${NEXTCLOUD_DOMAIN}(.*)$ http://z7lsmsostnhiadxeuc6f5lto5jssa3j4c3jxaol6tvjavsxarf5agyyd.onion$1

        RequestHeader set X-Forwarded-Proto "http"

        # Distinguish normal traffic from tor's..
        RequestHeader set X-Forwarded-For "tor"

        RequestHeader set X-Forwarded-Port "80"
        RequestHeader set X-Forwarded-Host "z7lsmsostnhiadxeuc6f5lto5jssa3j4c3jxaol6tvjavsxarf5agyyd.onion"

        ProxyPass / http://127.0.0.1:4005/ keepalive=On
        ProxyPassReverse / http://127.0.0.1:4005/ keepalive=On
    </VirtualHost>
    </IfModule>

include the virtual host file from the main Apache configuration file:

    Include /etc/apache2/nextcloud_tor.apache.conf
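The `Header edit Location` directive in the TOR virtual host rewrites redirects that point to the clearnet domain so that they stay on the onion service. The following sketch reproduces that rewrite in Python for illustration only. The function name `rewrite_location` is hypothetical, and unlike the Apache directive it escapes the dots in the domain name.

```python
import re

ONION = 'z7lsmsostnhiadxeuc6f5lto5jssa3j4c3jxaol6tvjavsxarf5agyyd.onion'


def rewrite_location(location: str, clearnet_domain: str,
                     onion: str = ONION) -> str:
    """Replace an https clearnet prefix with the http onion address."""
    pattern = '^https://' + re.escape(clearnet_domain) + '(.*)$'
    return re.sub(pattern, lambda m: 'http://' + onion + m.group(1), location)


if __name__ == '__main__':
    # your.FQDN.domain.org is the placeholder value of NEXTCLOUD_DOMAIN.
    print(rewrite_location('https://your.FQDN.domain.org/login',
                           'your.FQDN.domain.org'))
```

Locations that do not start with the clearnet domain are returned unchanged, which matches how a non-matching `Header edit` leaves the header untouched.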

Apps#

Tips and configurations for some Nextcloud apps

Collabora (cloud office suite)#

To make Collabora interact with Nextcloud without errors you need to deploy a new virtual server in Apache. Collabora must work with the same security protocol as the Nextcloud instance. In all these examples you see that Nextcloud is deployed in SSL mode so Collabora must be in SSL mode as well.

Collabora is deployed as a Docker container dependency of Nextcloud.

See also

Installation example with Docker — Nextcloud latest Administration Manual latest documentation [15]

Nextcloud & Collabora docker installation. Error: Collabora Online should use the same protocol as the server installation - 🚧 Installation - Nextcloud community [16]

Socket Error when accessing Collabora - ℹ️ Support / 📄 Collabora - Nextcloud community [17]

Can’t connect to OnlyOffice document server after update to NC19 - ℹ️ Support - Nextcloud community [30]

follow the Docker instructions

edit the Nextcloud docker-compose file by adding the Collabora service

/home/jobs/scripts/by-user/root/docker/nextcloud/docker-compose.yml

    restart: always
    ports:
      - 127.0.0.1:9980:9980
    environment:
      - domain=${NEXTCLOUD_FQDN}
      - server_name=${COLLABORA_FQDN}
      - dictionaries=de en es it
      - extra_params=--o:ssl.enable=true --o:ssl.termination=true
    cap_add:
      - MKNOD
      # WARNING: SYS_ADMIN (CAP_SYS_ADMIN): man 7 capabilities
      # This is very privileged!
      # See also
      # https://docs.docker.com/compose/compose-file/compose-file-v3/#cap>
      # https://github.com/CollaboraOnline/online/issues/779
      # - SYS_ADMIN

Note

Replace NEXTCLOUD_FQDN and COLLABORA_FQDN with appropriate values.

Add collabora to the links and depends_on sections of the Nextcloud service.

serve Collabora via HTTPS by creating a new Apache virtual host. Replace COLLABORA_FQDN with the appropriate domain and include this file from the Apache configuration

/etc/apache2/collabora.apache.conf

    #############
    # Collabora #
    #############

    <IfModule mod_ssl.c>
    <VirtualHost *:80>
        ServerName ${COLLABORA_FQDN}

        # Force https.
        UseCanonicalName on
        RewriteEngine on
        RewriteCond %{SERVER_NAME} =${COLLABORA_FQDN}

        # Ignore rewrite rules for 127.0.0.1
        RewriteCond %{HTTP_HOST} !=127.0.0.1
        RewriteCond %{REMOTE_ADDR} !=127.0.0.1

        RewriteRule ^ https://%{SERVER_NAME}%{REQUEST_URI} [END,NE,R=permanent]
    </VirtualHost>
    </IfModule>

    <IfModule mod_ssl.c>
    <VirtualHost *:443>
        ServerName ${COLLABORA_FQDN}

        SSLEngine on
        AllowEncodedSlashes NoDecode

        # Container uses a unique non-signed certificate
        SSLProxyEngine On
        SSLProxyVerify None
        SSLProxyCheckPeerCN Off
        SSLProxyCheckPeerName Off

        ProxyPreserveHost On

        # static html, js, images, etc. served from coolwsd
        # browser is the client part of LibreOffice Online
        ProxyPass /browser https://127.0.0.1:9980/browser retry=0
        ProxyPassReverse /browser https://127.0.0.1:9980/browser

        # WOPI discovery URL
        ProxyPass /hosting/discovery https://127.0.0.1:9980/hosting/discovery retry=0
        ProxyPassReverse /hosting/discovery https://127.0.0.1:9980/hosting/discovery

        # Main websocket
        ProxyPassMatch "/cool/(.*)/ws$" wss://127.0.0.1:9980/cool/$1/ws nocanon

        # Admin Console websocket
        ProxyPass /cool/adminws wss://127.0.0.1:9980/cool/adminws

        # Download as, Fullscreen presentation and Image upload operations
        ProxyPass /cool https://127.0.0.1:9980/cool
        ProxyPassReverse /cool https://127.0.0.1:9980/cool

        # Compatibility with integrations that use the /lool/convert-to endpoint
        ProxyPass /lool https://127.0.0.1:9980/cool
        ProxyPassReverse /lool https://127.0.0.1:9980/cool

        # Capabilities
        ProxyPass /hosting/capabilities https://127.0.0.1:9980/hosting/capabilities retry=0
        ProxyPassReverse /hosting/capabilities https://127.0.0.1:9980/hosting/capabilities

        Include /etc/letsencrypt/options-ssl-apache.conf
        SSLCertificateFile /etc/letsencrypt/live/${COLLABORA_FQDN}/fullchain.pem
        SSLCertificateKeyFile /etc/letsencrypt/live/${COLLABORA_FQDN}/privkey.pem
    </VirtualHost>
    </IfModule>

restart the Nextcloud container and its dependencies with docker-compose

restart Apache

    systemctl restart apache2

install the Nextcloud Office app from the store. Go to the add apps admin menu, then to the Search section

configure the app from the Nextcloud admin settings. Go to the Office tab and use these settings

Use your own server

URL (and Port) of Collabora Online-server: ${COLLABORA_FQDN}

click on Save

create a new office document or open an existing one to check if everything is working

Nextcloud calendar to PDF#

Export a Nextcloud calendar to a PDF file. This is not an app you install from the Nextcloud store but an external script which interacts with the Nextcloud API

![Nextcloud (HTTP API) -> iCalendar (requests) -> HTML (ical2html) -> PDF (Weasyprint)](../../_images/graphviz-1e0b249961b5ac76ac70bca9ac00205ee13287c7.png)

See also

Index of /Tools/Ical2html [11]

install the dependencies

    apt-get install python3-venv ical2html

create the jobs directories

    mkdir -p /home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf
    cd /home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf

create a new Nextcloud user specifically for this purpose and share the calendar with it

connect to your Nextcloud instance using the new user and go to the calendar app

get the calendar name by opening the calendar options and clicking on Copy private link

create the script

/home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf/nextcloud_calendar_to_pdf.py

    #!/usr/bin/env python3
    #
    # nextcloud_calendar_to_pdf.py
    #
    # Copyright (C) 2022-2023 Franco Masotti (franco \D\o\T masotti {-A-T-} tutanota \D\o\T com)
    #
    # This program is free software: you can redistribute it and/or modify
    # it under the terms of the GNU General Public License as published by
    # the Free Software Foundation, either version 3 of the License, or
    # (at your option) any later version.
    #
    # This program is distributed in the hope that it will be useful,
    # but WITHOUT ANY WARRANTY; without even the implied warranty of
    # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the
    # GNU General Public License for more details.
    #
    # You should have received a copy of the GNU General Public License
    # along with this program.  If not, see <https://www.gnu.org/licenses/>.
    #
    # See more copyrights and licenses below.

    import calendar
    import datetime
    import pathlib
    import shlex
    import subprocess
    import sys
    import urllib.error
    import urllib.parse
    import urllib.request

    import yaml
    from weasyprint import HTML

    if __name__ == '__main__':
        configuration_file = sys.argv[1]
        config = yaml.load(open(configuration_file), Loader=yaml.SafeLoader)

        # An empty answer is padded to all zeros and replaced by the current value.
        input_year: str = input('Year (empty for current)  : ').zfill(4)
        input_month: str = input('Month (empty for current): ').zfill(2)
        if input_year == '0000':
            input_year = str(datetime.datetime.now().year).zfill(4)
        if input_month == '00':
            input_month = str(datetime.datetime.now().month).zfill(2)
        input('Are you sure, year=' + input_year + ' month=' + input_month + '? ')

        # Day is always fixed at 1.
        input_day: str = '01'
        input_date: str = input_year + input_month + input_day
        calendar_error_message: str = 'This is the WebDAV interface. It can only be accessed by WebDAV clients such as the Nextcloud desktop sync client.'

        try:
            # Format yyyymmdd
            ttime = datetime.datetime.strptime(input_date, '%Y%m%d')
            month_range: tuple = calendar.monthrange(ttime.year, ttime.month)

            # Pan days = current_month.days - 1
            pan_days: str = str(month_range[1] - 1)
        except ValueError:
            print('date parse error')
            sys.exit(1)

        calendar_api_uri: str = ('https://' + config['nextcloud']['host']
                                 + '/remote.php/dav/calendars/'
                                 + config['nextcloud']['user'] + '/'
                                 + config['nextcloud']['calendar_name']
                                 + '?export')

        # Check if the computed string is a valid URL.
        try:
            nc_calendar_string = urllib.parse.urlparse(calendar_api_uri)
            if (nc_calendar_string.scheme == ''
                    or nc_calendar_string.netloc == ''):
                raise ValueError
        except ValueError:
            print('URL parse error')
            sys.exit(1)

        # Get the calendar using credentials.
        # Send credentials immediately, do not wait for the 401 error.
        password_manager = urllib.request.HTTPPasswordMgrWithPriorAuth()
        password_manager.add_password(None,
                                      calendar_api_uri,
                                      config['nextcloud']['user'],
                                      config['nextcloud']['password'],
                                      is_authenticated=True)
        handler = urllib.request.HTTPBasicAuthHandler(password_manager)
        opener = urllib.request.build_opener(handler)
        urllib.request.install_opener(opener)

        try:
            with urllib.request.urlopen(calendar_api_uri) as response:
                # The response body is raw bytes containing the iCalendar data.
                nc_calendar: bytes = response.read()

                if nc_calendar.decode('UTF-8') == calendar_error_message:
                    raise ValueError
        except (urllib.error.URLError, ValueError) as e:
            print(e)
            print('error getting element from the Nextcloud instance at '
                  + calendar_api_uri)
            sys.exit(1)

        # Transform the ical file to HTML.
        command: str = ('ical2html --footer=' + config['ical2html']['footer']
                        + ' --timezone=' + config['ical2html']['timezone']
                        + ' ' + input_date
                        + ' P' + pan_days + 'D '
                        + config['ical2html']['options'])
        command_args: list = shlex.split(command)
        try:
            process = subprocess.run(command_args,
                                     input=nc_calendar,
                                     check=True,
                                     capture_output=True)
        except subprocess.CalledProcessError:
            print('ical2html error')
            sys.exit(1)

        # Transform the HTML file to PDF.
        try:
            output_file = str(
                pathlib.Path(
                    config['output_basedir'],
                    config['nextcloud']['calendar_name'] + '_' + input_date
                    + '_dayrange_' + str(month_range[1])))
            html = HTML(string=process.stdout)
            final_file = output_file + '.pdf'
            html.write_pdf(final_file)
            print('output file = ' + final_file)
        except (TypeError, ValueError):
            print('weasyprint error')
            sys.exit(1)

create the configuration

/home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf/nextcloud_calendar_to_pdf.yaml

    nextcloud:
        host: 'my.nextcloud.com'
        user: 'calendar-user'
        password: 'my password'

        # Must already be slugged.
        calendar_name: 'my-personal-calendar_shared_by_someotheruser'

    ical2html:
        # Pass options directly.
        options: '--monday'
        timezone: 'Europe/Rome'
        footer: '"A generic footer message"'

    output_basedir: '/home/myuser/calendar_outputs'

create a launcher

/home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf_laucher.sh

    #!/usr/bin/env bash
    #
    # nextcloud_calendar_to_pdf_laucher.sh
    #
    # Copyright (C) 2022-2023 Franco Masotti (franco \D\o\T masotti {-A-T-} tutanota \D\o\T com)
    #
    # This program is free software: you can redistribute it and/or modify
    # it under the terms of the GNU General Public License as published by
    # the Free Software Foundation, either version 3 of the License, or
    # (at your option) any later version.
    #
    # This program is distributed in the hope that it will be useful,
    # but WITHOUT ANY WARRANTY; without even the implied warranty of
    # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.  See the
    # GNU General Public License for more details.
    #
    # You should have received a copy of the GNU General Public License
    # along with this program.  If not, see <https://www.gnu.org/licenses/>.
    #

    set -euo pipefail

    pushd /home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf

    # Create the virtual environment.
    if [ ! -d '.venv' ]; then
        python3 -m venv .venv
        . .venv/bin/activate
        pip3 install --requirement requirements.txt
        deactivate
    fi

    . .venv/bin/activate && ./nextcloud_calendar_to_pdf.py ./nextcloud_calendar_to_pdf.yaml && deactivate

    popd

create the requirements.txt file

/home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf/requirements.txt

    PyYAML>=6,<7
    WeasyPrint>=52,<53

fix the permissions

    chmod 700 /home/jobs/scripts/by-user/myuser
    chmod 700 -R /home/jobs/services/by-user/myuser

Run the script

    /home/jobs/scripts/by-user/myuser/nextcloud_calendar_to_pdf_laucher.sh
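The script derives two values from the chosen month: the start date passed to ical2html and an ISO 8601 duration covering the rest of the month. The sketch below isolates that arithmetic, together with the export URL the script requests. The function names `ical2html_range` and `export_url` are hypothetical and are not part of the script.

```python
import calendar


def ical2html_range(year: int, month: int) -> tuple:
    """Return the start date (yyyymmdd) and duration for a whole month."""
    days_in_month = calendar.monthrange(year, month)[1]
    start = f'{year:04d}{month:02d}01'
    # The script uses "days in month - 1" as the number of pan days.
    duration = f'P{days_in_month - 1}D'
    return start, duration


def export_url(host: str, user: str, calendar_name: str) -> str:
    """Build the calendar export URL requested by the script."""
    return (f'https://{host}/remote.php/dav/calendars/'
            f'{user}/{calendar_name}?export')


if __name__ == '__main__':
    print(ical2html_range(2023, 2))
    print(export_url('my.nextcloud.com', 'calendar-user',
                     'my-personal-calendar_shared_by_someotheruser'))
```

For February 2023, which has 28 days, the range is `20230201` with a duration of `P27D`.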

Nextcloud news to epub#

install the dependencies

    apt-get install pandoc

create the script

/home/jobs/scripts/by-user/myuser/nextcloud_news_to_epub/nc_news_to_epub.py

    #!/usr/bin/env python3
    #
    # SPDX-FileCopyrightText: 2023 Franco Masotti
    #
    # SPDX-License-Identifier: MIT

    import datetime
    import json
    import pathlib
    import shlex
    import shutil
    import sys
    from urllib.parse import urljoin

    import fpyutils
    import httpx
    import nh3
    import platformdirs
    import yaml


    def get_sources(base_url: str, user: str, password: str) -> dict:
        # List all the feed sources of the user.
        sources_endpoint: str = urljoin(base_url, 'feeds')
        try:
            r = httpx.get(sources_endpoint, auth=(user, password))
            r.raise_for_status()
        except httpx.HTTPError as exc:
            print(f'HTTP Exception for {exc.request.url} - {exc}')
            sys.exit(1)

        return json.loads(r.text)


    def get_feeds(base_url, user: str, password: str, feed_id: int,
                  number_of_feeds_per_source: int):
        # Get the latest unread items of a single source.
        latest_feeds_from_source_endpoint: str = urljoin(
            base_url, 'items?id=' + str(feed_id) + '&batchSize='
            + str(number_of_feeds_per_source)
            + '&type=0&getRead=false&offset=0')
        try:
            r = httpx.get(latest_feeds_from_source_endpoint,
                          auth=(user, password))
            r.raise_for_status()
        except httpx.HTTPError as exc:
            print(f'HTTP Exception for {exc.request.url} - {exc}')
            sys.exit(1)

        return json.loads(r.text)


    def build_html(feed_struct: dict) -> str:
        url: str = feed_struct['url']
        title: str = feed_struct['title']
        author: str = feed_struct['author']
        pub_date: int = feed_struct['pubDate']

        # Missing fields are rendered as empty strings.
        if feed_struct['title'] is None:
            title = ''
        if feed_struct['url'] is None:
            url = ''
        if feed_struct['author'] is None:
            author = ''
        if feed_struct['pubDate'] is None:
            pub_date = ''
        else:
            pub_date = datetime.datetime.fromtimestamp(
                pub_date, datetime.timezone.utc).strftime('%Y-%m-%d %H:%M:%S')

        return ''.join([
            '<html><body><h1>', title, '</h1><h2>', url, '</h2><h2>', author,
            '</h2><h2>', pub_date, '</h2>', feed_struct['body'],
            '</body></html>'
        ])


    def post_actions(base_url: str, user: str, password: str,
                     feeds_ids_read: list, action: str):
        # Apply an action (for example 'read' or 'star') to multiple items.
        try:
            r = httpx.post(urljoin(base_url, 'items/' + action + '/multiple'),
                           json={'itemIds': feeds_ids_read},
                           auth=(user, password))
            r.raise_for_status()
        except httpx.HTTPError as exc:
            print(f'HTTP Exception for {exc.request.url} - {exc}')
            sys.exit(1)


    if __name__ == '__main__':

        def main():
            # The path is passed to open() as-is: shell quoting would break
            # file names containing spaces or other special characters.
            configuration_file = sys.argv[1]
            config = yaml.load(open(configuration_file), Loader=yaml.SafeLoader)
            feeds_ids_read: list = list()
            base_url: str = urljoin('https://' + config['server']['domain'],
                                    '/index.php/apps/news/api/v1-3/')

            # Remove cache dir.
            shutil.rmtree(str(
                pathlib.Path(platformdirs.user_cache_dir(), 'ncnews2epub')),
                          ignore_errors=True)

            # Create cache and output dirs.
            pathlib.Path(platformdirs.user_cache_dir(),
                         'ncnews2epub').mkdir(mode=0o700,
                                              parents=True,
                                              exist_ok=True)
            pathlib.Path(config['files']['output_directory']).mkdir(
                mode=0o700, parents=True, exist_ok=True)

            sources: dict = get_sources(base_url, config['server']['user'],
                                        config['server']['password'])
            for s in sources['feeds']:
                feeds: dict = get_feeds(
                    base_url, config['server']['user'],
                    config['server']['password'], s['id'],
                    config['feeds']['number_of_feeds_per_source'])
                for feed in feeds['items']:
                    # Build and sanitize HTML.
                    html: str = nh3.clean(build_html(feed))

                    feeds_ids_read.append(feed['id'])

                    html_file_name: str = feed['guidHash'] + '.html'
                    html_file: str = str(
                        pathlib.Path(platformdirs.user_cache_dir(),
                                     'ncnews2epub', html_file_name))
                    with open(html_file, 'w') as f:
                        f.write(html)

            # Get all '.html' files in the cache directory
            html_files: str = shlex.join([
                str(f) for f in pathlib.Path(platformdirs.user_cache_dir(),
                                             'ncnews2epub').iterdir()
                if f.is_file() and f.suffix == '.html'
            ])
            formatted_date: str = datetime.datetime.now(
                datetime.timezone.utc).strftime('%Y-%m-%d-%H-%M-%S')
            epub_filename: str = ''.join(
                ['nextcloud_news_export_', formatted_date, '.epub'])
            epub_full_path: str = str(
                pathlib.Path(config['files']['output_directory'],
                             epub_filename))
            # The output path is quoted so that directories with spaces work.
            pandoc_command: str = ''.join([
                shlex.quote(config['binaries']['pandoc']),
                ' --from=html --metadata title="',
                str(pathlib.Path(epub_filename).stem), '" --to=epub ',
                html_files, ' -o ', shlex.quote(epub_full_path)
            ])
            fpyutils.shell.execute_command_live_output(pandoc_command)

            if config['feeds']['mark_read']:
                post_actions(
                    base_url,
                    config['server']['user'],
                    config['server']['password'],
                    feeds_ids_read,
                    'read',
                )
            if config['feeds']['mark_starred']:
                post_actions(
                    base_url,
                    config['server']['user'],
                    config['server']['password'],
                    feeds_ids_read,
                    'star',
                )

        main()

create the configuration

includes/home/jobs/scripts/by-user/myuser/nextcloud_news_to_epub/nc_news_to_epub.yaml

    #
    # SPDX-FileCopyrightText: 2023 Franco Masotti
    #
    # SPDX-License-Identifier: MIT
    #

    server:
      domain: 'my.nextcloud.instance'
      user: 'user'
      password: 'generate-app-pwd-in-nc'

    feeds:
      number_of_feeds_per_source: 10
      mark_read: true
      mark_starred: false

    binaries:
      pandoc: '/usr/bin/pandoc'

    files:
      output_directory: '/home/myuser/Documents'

create the Makefile

includes/home/jobs/scripts/by-user/myuser/nextcloud_news_to_epub/Makefile

    #
    # SPDX-FileCopyrightText: 2023 Franco Masotti
    #
    # SPDX-License-Identifier: MIT
    #

    # See
    # https://docs.python.org/3/library/venv.html#how-venvs-work
    export VENV_CMD=. .venv_nc_news_to_epub/bin/activate
    export VENV_DIR=.venv_nc_news_to_epub

    default: install-dev

    install-dev:
    	python3 -m venv $(VENV_DIR)
    	$(VENV_CMD) \
    		&& pip install --requirement requirements-freeze.txt \
    		&& deactivate

    regenerate-freeze: uninstall-dev
    	python3 -m venv $(VENV_DIR)
    	$(VENV_CMD) \
    		&& pip install --requirement requirements.txt --requirement requirements-dev.txt \
    		&& pip freeze --local > requirements-freeze.txt \
    		&& deactivate

    uninstall-dev:
    	rm -rf $(VENV_DIR)

    run:
    	python3 -m venv $(VENV_DIR)
    	$(VENV_CMD) \
    		&& python3 -m nc_news_to_epub nc_news_to_epub.yaml

    .PHONY: default install-dev regenerate-freeze uninstall-dev

create the requirements.txt and requirements-dev.txt files

includes/home/jobs/scripts/by-user/myuser/nextcloud_news_to_epub/requirements.txt

includes/home/jobs/scripts/by-user/myuser/nextcloud_news_to_epub/requirements-dev.txt

    fpyutils>=3,<4
    httpx>=0.25,<1
    nh3>=0.2,<0.3
    platformdirs>=3,<4
    PyYAML>=6.0.1,<7

fix the permissions

    chmod 700 /home/jobs/scripts/by-user/myuser
    chmod 700 -R /home/jobs/services/by-user/myuser

install the virtual environment

    make regenerate-freeze
    make install-dev

Run the script

make run
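The script above builds the items query string by concatenating strings. As a minimal sketch, the same URL can be assembled with `urllib.parse.urlencode`, which also escapes the values. `build_items_url` is a hypothetical helper name used only for illustration; it is not part of the original script.

```python
from urllib.parse import urlencode, urljoin


def build_items_url(base_url: str, feed_id: int, batch_size: int) -> str:
    """Return the News API URL that lists the unread items of one feed."""
    # Same parameters as the query string hard-coded in get_feeds().
    query = urlencode({
        'id': feed_id,
        'batchSize': batch_size,
        'type': 0,
        'getRead': 'false',
        'offset': 0,
    })
    # base_url must end with '/', otherwise urljoin() replaces its last
    # path segment instead of appending to it.
    return urljoin(base_url, 'items?' + query)


base = 'https://my.nextcloud.instance/index.php/apps/news/api/v1-3/'
print(build_items_url(base, 3, 10))
```

The trailing slash matters: the original script already passes a base URL ending in `/v1-3/`, and this sketch relies on the same assumption.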

Full text search with Elasticsearch#

These apps enable you to search text through all files (no OCR).

See also

Docker-compose fails with ingest-attachment - Elastic Stack / Elasticsearch - Discuss the Elastic Stack [7]

How to Run Elasticsearch 8 on Docker for Local Development | by Lynn Kwong | Level Up Coding [8]

ingest-attachment and node alive problem · Issue #42 · nextcloud/fulltextsearch_elasticsearch · GitHub [9]

elasticsearch - Recommended RAM ratios for ELK with docker-compose - Stack Overflow [10]

Plugin was built for Elasticsearch version 7.7.0 but version 7.7.1 is runnin · Issue #10 · opendatasoft/elasticsearch-aggregation-geoclustering · GitHub [33]

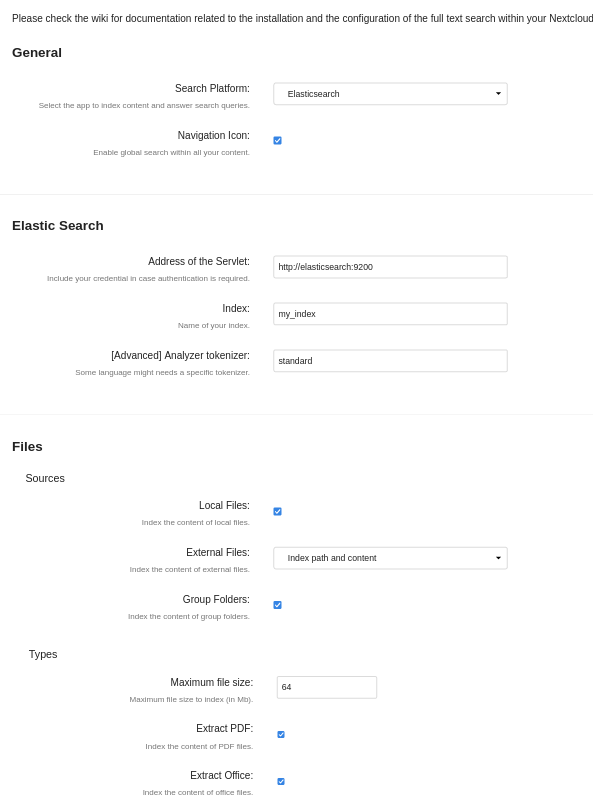

install these three apps in Nextcloud. Go to the add apps admin menu, then to the Search section

configure the search from the Nextcloud admin settings. Go to the Full text search tab and use these settings:

Nextcloud administration search settings#

add this content to the docker compose file

/home/jobs/scripts/by-user/root/docker/nextcloud/docker-compose.yml

    # PostgreSQL in host with elasticsearch.
    version: '2'

    volumes:
      nextcloud:
      redis:

    services:
      app:
        image: nextcloud:production
        restart: always
        ports:
          - 4005:80
        links:
          - redis
          - elasticsearch
          # Enable this if you use Collabora
          # - collabora
        depends_on:
          - redis
          - elasticsearch
          # Enable this if you use Collabora
          # - collabora
        volumes:
          - ${DATA_PATH}:/var/www/html/data
          - ${HTML_DATA_PATH}:/var/www/html
        environment:
          - REDIS_HOST=redis
          - REDIS_HOST_PORT=6379
          - POSTGRES_DB=${DATABASE_NAME}
          - POSTGRES_USER=${DATABASE_USER}
          - POSTGRES_HOST=${DATABASE_HOST}
          - POSTGRES_PASSWORD=${DATABASE_PASSWORD}
          - APACHE_DISABLE_REWRITE_IP=1
          - TRUSTED_PROXIES=127.0.0.1
          - OVERWRITEHOST=${FQDN}
          - OVERWRITEPROTOCOL=https
          - OVERWRITECLIURL=https://${FQDN}

      elasticsearch:
        image: elasticsearch:7.17.7
        environment:
          - xpack.security.enabled=false
          - "discovery.type=single-node"
        hostname: elasticsearch
        volumes:
          # NOTE
          # enable the data volume when instructed!
          # NOTE
          #
          # - ${ES_DATA_PATH}:/usr/share/elasticsearch/data
          - ${ES_PLUGINS_PATH}:/usr/share/elasticsearch/plugins
        ports:
          - 9200:9200
        # Very important: elastic search will use all memory available
        # so you must set an appropriate value here.
        mem_limit: 16g

      redis:
        image: redis:6.2.5
        restart: always
        hostname: redis
        volumes:
          - redis:/var/lib/redis

      collabora:
        image: collabora/code:23.05.3.1.1

Note

Replace DATABASE_NAME, DATABASE_USER, DATABASE_PASSWORD, DATABASE_HOST, DATA_PATH, HTML_DATA_PATH, FQDN, ES_DATA_PATH and ES_PLUGINS_PATH with appropriate values.

Important

At the moment only version 8 of Elasticsearch is supported, as stated in the GitHub development page:

As of [Nextcloud] version 26.0.0 this app is only compatible with Elasticsearch 8

go to the Nextcloud docker directory and run the app

    pushd /home/jobs/scripts/by-user/root/docker/nextcloud
    docker-compose up --remove-orphans

install the attachment plugin

/usr/bin/docker-compose exec -T elasticsearch /usr/share/elasticsearch/bin/elasticsearch-plugin install ingest-attachment

check the user id and group id of the data directory in the elasticsearch container

    /usr/bin/docker-compose exec -T elasticsearch id elasticsearch
    /usr/bin/docker-compose exec -T elasticsearch ls -ld /usr/share/elasticsearch/data

stop the app

    docker-compose down

change the ownership of the docker volume to the user and group reported by the previous commands. In my case the owner user is 1000 while the owner group is root

    chown 1000:root esdata

enable the commented volume, ES_DATA_PATH, from the docker-compose file

create a Systemd unit file. See also the Docker compose services section

/home/jobs/services/by-user/root/docker-compose.nextcloud.service

    [Unit]
    Requires=docker.service
    Requires=network-online.target
    After=docker.service
    After=network-online.target

    # Enable these for the "scalable" variant.
    # Requires=redis.service
    # Requires=redis-socket.service
    # After=redis.service
    # After=redis-socket.service

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    ExecStart=/usr/bin/docker-compose up --remove-orphans
    ExecStop=/usr/bin/docker-compose down --remove-orphans

    Restart=always

    [Install]
    WantedBy=multi-user.target

run the deploy script

test if the interaction between the Nextcloud container and Elasticsearch is working. The last command does the actual indexing and it may take a long time to complete

    /usr/bin/docker-compose exec -T --user www-data app php occ fulltextsearch:test
    /usr/bin/docker-compose exec -T --user www-data app php occ fulltextsearch:check
    /usr/bin/docker-compose exec -T --user www-data app php occ fulltextsearch:index

create a Systemd unit file

/home/jobs/services/by-user/root/nextcloud-elasticsearch.service

    [Unit]
    Requires=docker-compose.nextcloud.service
    Requires=network-online.target
    After=docker-compose.nextcloud.service
    After=network-online.target

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    Restart=no
    ExecStart=/usr/bin/bash -c '/usr/bin/docker-compose exec -T --user www-data app php occ fulltextsearch:index'

create a Systemd timer unit file

/home/jobs/services/by-user/root/nextcloud-elasticsearch.timer

    [Unit]
    Description=Once every hour run Nextcloud's elasticsearch indexing

    [Timer]
    OnCalendar=Hourly
    Persistent=true

    [Install]
    WantedBy=timers.target

run the deploy script

Important

When you update to a new Elasticsearch minor version, for example from version 7.17.7 to 7.17.8, you must recreate the index like this

systemctl stop docker-compose.nextcloud.service

/usr/bin/docker-compose run --rm elasticsearch /usr/share/elasticsearch/bin/elasticsearch-plugin remove ingest-attachment

/usr/bin/docker-compose run --rm elasticsearch /usr/share/elasticsearch/bin/elasticsearch-plugin install ingest-attachment

systemctl restart docker-compose.nextcloud.service

Important

When you upgrade to a new Elasticsearch major version, for example from version 7 to 8, you must:

stop the Elasticsearch container

remove the existing data in the Elasticsearch volume

repeat the Elasticsearch instructions from the start, i.e.: re-test and re-index everything.
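The two upgrade notes above amount to a comparison between the old and the new version number. The following is a minimal sketch, assuming version strings in the `major.minor.patch` form used by the image tags on this page. `parse_version`, `upgrade_action` and the returned strings are hypothetical names chosen for illustration.

```python
def parse_version(version: str) -> tuple:
    """Turn a string such as '7.17.7' into the tuple (7, 17, 7)."""
    return tuple(int(part) for part in version.split('.'))


def upgrade_action(old: str, new: str) -> str:
    """Return which of the two procedures described above applies."""
    old_v, new_v = parse_version(old), parse_version(new)
    if old_v == new_v:
        return 'nothing to do'
    if old_v[0] != new_v[0]:
        # Major upgrade: stop the container, remove the data, start over.
        return 'remove data, then re-test and re-index'
    # Same major version: remove and install ingest-attachment again.
    return 'reinstall ingest-attachment'


print(upgrade_action('7.17.7', '7.17.8'))
print(upgrade_action('7.17.7', '8.0.0'))
```

A check like this could run before changing the image tag in the docker-compose file, so that the right procedure is chosen up front.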

A.I. integration#

Instead of using LocalAI as a self-hosted A.I. system, we are going to use Ollama. See the Open WebUI page.


Talk (a.k.a. Spreed)#

You can use metered.ca OpenRelay’s STUN and TURN servers to improve communication between WebRTC P2P clients.

See also

Free WebRTC TURN Server - Open Relay Project | Open Relay Project - Free WebRTC TURN Server [5]

Services#

Once you have your Nextcloud container running you may want to integrate these services as well

Required#

Crontab#

See also

Background jobs — Nextcloud latest Administration Manual latest documentation [4]

create another Systemd unit file that will run tasks periodically

/home/jobs/services/by-user/root/nextcloud-cron.service

    [Unit]
    Requires=docker-compose.nextcloud.service
    Requires=network-online.target
    After=docker-compose.nextcloud.service
    After=network-online.target

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    Restart=no
    ExecStart=/usr/bin/bash -c '/usr/bin/docker-compose exec -T --user www-data app php cron.php'

create a Systemd timer file

/home/jobs/services/by-user/root/nextcloud-cron.timer

    [Unit]
    Description=Once every 15 minutes run Nextcloud's cron

    [Timer]
    OnCalendar=*:0/15
    Persistent=true

    [Install]
    WantedBy=timers.target

run the deploy script
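The `OnCalendar=*:0/15` expression in the timer above matches minutes 0, 15, 30 and 45 of every hour. As a rough illustration of that schedule, and not of how Systemd computes it internally, this sketch returns the next matching instant after a given time. `next_cron_run` is a hypothetical name.

```python
import datetime


def next_cron_run(now: datetime.datetime) -> datetime.datetime:
    """Return the first quarter-hour boundary strictly after `now`."""
    # Round down to the current quarter hour, then move one step forward.
    floored = now.replace(minute=now.minute - now.minute % 15,
                          second=0,
                          microsecond=0)
    return floored + datetime.timedelta(minutes=15)


print(next_cron_run(datetime.datetime(2024, 1, 1, 10, 7, 30)))
```

The same expression can be checked on the host with `systemd-analyze calendar '*:0/15'`.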

Optional#

File scanner#

create another Systemd unit file that will enable Nextcloud to periodically scan for new files added by external sources

/home/jobs/services/by-user/root/scan-files-nextcloud.service

    [Unit]
    Requires=docker-compose.nextcloud.service
    Requires=network-online.target
    After=docker-compose.nextcloud.service
    After=network-online.target

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    Restart=no
    # Scan all new files.
    ExecStart=/usr/bin/bash -c '/usr/bin/docker-compose exec -T --user www-data app php occ files:scan --all'

create a Systemd timer file

/home/jobs/services/by-user/root/scan-files-nextcloud.timer

    [Unit]
    Description=Once every week scan-files-nextcloud

    [Timer]
    OnCalendar=weekly
    Persistent=true

    [Install]
    WantedBy=timers.target

run the deploy script

Image previews#

To have faster image load time you can use the image preview app.


install the apps in Nextcloud. Go to the add apps admin menu, then to the Search section

append these options to the main configuration file

${HTML_DATA_PATH}/config/config.php

    'preview_max_x' => '4096',
    'preview_max_y' => '4096',
    'jpeg_quality' => '40',

restart Nextcloud

    systemctl restart docker-compose.nextcloud.service

go to the docker-compose directory and run these commands to set the preview sizes

    cd /home/jobs/scripts/by-user/root/docker/nextcloud
    docker-compose exec -T --user www-data app php occ config:app:set --value="32 128" previewgenerator squareSizes
    docker-compose exec -T --user www-data app php occ config:app:set --value="128 256" previewgenerator widthSizes
    docker-compose exec -T --user www-data app php occ config:app:set --value="128" previewgenerator heightSizes

generate the previews and wait

    docker-compose exec -T --user www-data app php occ preview:generate-all -vvv

Note

You may get an error such as "Could not create folder". Retry scanning manually later until it works.

create a Systemd unit file

/home/jobs/services/by-user/root/nextcloud-preview.service

    [Unit]
    Requires=docker-compose.nextcloud.service
    Requires=network-online.target
    After=docker-compose.nextcloud.service
    After=network-online.target

    [Service]
    Type=simple
    WorkingDirectory=/home/jobs/scripts/by-user/root/docker/nextcloud

    Restart=no
    ExecStart=/usr/bin/bash -c '/usr/bin/docker-compose exec -T --user www-data app php occ preview:generate-all'

create a Systemd timer unit file

/home/jobs/services/by-user/root/nextcloud-preview.timer

    [Unit]
    Description=Run Nextcloud's image preview generation daily

    [Timer]
    OnCalendar=*-*-* 04:00:00
    Persistent=true

    [Install]
    WantedBy=timers.target

run the deploy script

Note

If you need to regenerate or delete the previews you must follow the steps reported in the repository’s readme [13].
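The three `config:app:set` commands used earlier in this section differ only in the key and in the list of sizes. As a minimal sketch, they can be generated from a dictionary so that the sizes live in one place. `OCC_PREFIX` and `preview_size_commands` are hypothetical names, and the sizes are the ones shown above.

```python
import shlex

# Command prefix used throughout this page to call occ in the app container.
OCC_PREFIX = [
    'docker-compose', 'exec', '-T', '--user', 'www-data', 'app', 'php', 'occ'
]


def preview_size_commands(sizes: dict) -> list:
    """Build one shell-quoted occ command per preview size setting."""
    commands = []
    for key, values in sizes.items():
        value = ' '.join(str(v) for v in values)
        arguments = ['config:app:set', '--value=' + value,
                     'previewgenerator', key]
        # shlex.join() (Python 3.8+) quotes arguments containing spaces.
        commands.append(shlex.join(OCC_PREFIX + arguments))
    return commands


for command in preview_size_commands({
        'squareSizes': [32, 128],
        'widthSizes': [128, 256],
        'heightSizes': [128],
}):
    print(command)
```

The printed lines are meant to be reviewed and then run from the docker-compose directory, like the original commands.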

Export#

You can export all calendars, contacts and news sources using this script. This is useful if you cannot make a full backup, or in case something goes wrong during an update of your Nextcloud instance.

install the dependencies

    apt-get install python3-yaml

create a new user

    useradd --system -s /bin/bash -U nextcloud
    passwd nextcloud
    usermod -aG jobs nextcloud

create the jobs directories. See reference

    mkdir -p /home/jobs/{scripts,services}/by-user/nextcloud

create the

script

/home/jobs/scripts/by-user/nextcloud/nextcloud_export.py

    #!/usr/bin/env python3
    #
    # nextcloud_export.py
    #
    # Copyright (C) 2023 Franco Masotti (franco \D\o\T masotti {-A-T-} tutanota \D\o\T com)
    #
    # This program is free software: you can redistribute it and/or modify
    # it under the terms of the GNU General Public License as published by
    # the Free Software Foundation, either version 3 of the License, or
    # (at your option) any later version.
    #
    # This program is distributed in the hope that it will be useful,
    # but WITHOUT ANY WARRANTY; without even the implied warranty of
    # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
    # GNU General Public License for more details.
    #
    # You should have received a copy of the GNU General Public License
    # along with this program. If not, see <https://www.gnu.org/licenses/>.
    #

    import pathlib
    import re
    import sys
    import urllib.error
    import urllib.parse
    import urllib.request

    import yaml

    if __name__ == '__main__':
        configuration_file = sys.argv[1]
        with open(configuration_file) as config_stream:
            config = yaml.load(config_stream, Loader=yaml.SafeLoader)
        total_elements: int = len(config['element'])
        extension: str
        element_api_url: str
        element_error_message: str = 'This is the WebDAV interface. It can only be accessed by WebDAV clients such as the Nextcloud desktop sync client.'
        for i, c in enumerate(config['element']):
            print('getting Nextcloud element (' + str(i + 1) + ' of ' +
                  str(total_elements) + ') ' + c)
            if config['element'][c]['type'] == 'calendar':
                element_api_url = ('https://' + config['element'][c]['host'] +
                                   '/remote.php/dav/calendars/' +
                                   config['element'][c]['user'] + '/' +
                                   config['element'][c]['name'] + '?export')
                extension = 'vcs'
            elif config['element'][c]['type'] == 'address_book':
                element_api_url = ('https://' + config['element'][c]['host'] +
                                   '/remote.php/dav/addressbooks/users/' +
                                   config['element'][c]['user'] + '/' +
                                   config['element'][c]['name'] + '?export')
                extension = 'vcf'
            elif config['element'][c]['type'] == 'news':
                element_api_url = ('https://' + config['element'][c]['host'] +
                                   '/apps/news/export/opml')
                extension = 'opml'
            else:
                print('unsupported element: ' + config['element'][c]['type'])
                sys.exit(1)

            # Check if the computed string is a valid URL.
            try:
                element_string = urllib.parse.urlparse(element_api_url)
                if (element_string.scheme == '' or element_string.netloc == ''
                        or not re.match('^http(|s)', element_string.scheme)):
                    raise ValueError
            except ValueError:
                print('URL parse error')
                sys.exit(1)

            # Get the element using credentials.
            # Send credentials immediately, do not wait for the 401 error.
            password_manager = urllib.request.HTTPPasswordMgrWithPriorAuth()
            password_manager.add_password(None,
                                          element_api_url,
                                          config['element'][c]['user'],
                                          config['element'][c]['password'],
                                          is_authenticated=True)
            handler = urllib.request.HTTPBasicAuthHandler(password_manager)
            opener = urllib.request.build_opener(handler)
            urllib.request.install_opener(opener)

            try:
                with urllib.request.urlopen(element_api_url) as response:
                    # response.read() returns the raw body as bytes.
                    nc_element: bytes = response.read()

                    if nc_element.decode('UTF-8') == element_error_message:
                        raise ValueError
            except (urllib.error.URLError, ValueError) as e:
                print(e)
                print('error getting element from the Nextcloud instance at ' +
                      element_api_url)
                sys.exit(1)

            # Save the element using the directory structure "basedir/username/element".
            top_directory = pathlib.Path(config['output_basedir'],
                                         config['element'][c]['user'])
            top_directory.mkdir(mode=0o755, parents=True, exist_ok=True)
            final_path = str(
                pathlib.Path(top_directory,
                             config['element'][c]['name'] + '.' + extension))

            with open(final_path, 'wb') as f:
                f.write(nc_element)

create a configuration file

/home/jobs/scripts/by-user/nextcloud/nextcloud_export.yaml

    element:
      my_personal_calendar:
        # Do not include the http or https scheme.
        host: '${FQDN}'
        user: '${NC_USER}'
        password: '${NC_PASSWORD}'
        # Must already be slugged.
        name: 'personal'
        # valid values: {calendar,address_book,news}
        type: 'calendar'
      my_news_subscription:
        host: '${FQDN}'
        user: '${NC_USER}'
        password: '${NC_PASSWORD}'
        # 'name' for type 'news' can be any arbitrary value.
        # This will correspond to the file name, excluding the extension.
        name: 'news-subscription'
        type: 'news'
      my_contacts:
        host: '${FQDN}'
        user: '${NC_USER}'
        password: '${NC_PASSWORD}'
        name: 'contacts'
        type: 'address_book'

    output_basedir: '/data/NEXTCLOUD/export'

create the data directory which must be accessible by the nextcloud user

    mkdir /data/NEXTCLOUD/export
    chmod 700 /data/NEXTCLOUD/export
    chown nextcloud:nextcloud /data/NEXTCLOUD/export

use this Systemd service file

/home/jobs/services/by-user/nextcloud/nextcloud-export.service

    [Unit]
    Description=Nextcloud export elements
    Requires=network-online.target
    After=network-online.target
    Requires=data.mount
    After=data.mount
    Requires=docker-compose.nextcloud.service
    After=docker-compose.nextcloud.service

    [Service]
    Type=simple
    ExecStart=/home/jobs/scripts/by-user/nextcloud/nextcloud_export.py /home/jobs/scripts/by-user/nextcloud/nextcloud_export.yaml
    User=nextcloud
    Group=nextcloud

use this Systemd timer file

/home/jobs/services/by-user/nextcloud/nextcloud-export.timer

    [Unit]
    Description=Once a day Nextcloud export elements

    [Timer]
    OnCalendar=Daily
    Persistent=true

    [Install]
    WantedBy=timers.target

fix the permissions

    chown -R nextcloud:nextcloud /home/jobs/{scripts,services}/by-user/nextcloud
    chmod 700 -R /home/jobs/{scripts,services}/by-user/nextcloud
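The export script maps each element type to a different endpoint. The following sketch isolates that mapping in one function, under the same URL layout as the script. `export_url` is a hypothetical helper, and percent-encoding the user and element names with `urllib.parse.quote` is an addition that the original script does not perform.

```python
from urllib.parse import quote


def export_url(host: str, element_type: str, user: str = '',
               name: str = '') -> str:
    """Return the export URL for a calendar, an address book or the news."""
    if element_type == 'calendar':
        path = ('/remote.php/dav/calendars/' + quote(user, safe='') + '/' +
                quote(name, safe='') + '?export')
    elif element_type == 'address_book':
        path = ('/remote.php/dav/addressbooks/users/' + quote(user, safe='') +
                '/' + quote(name, safe='') + '?export')
    elif element_type == 'news':
        # The news export does not depend on the user or element name.
        path = '/apps/news/export/opml'
    else:
        raise ValueError('unsupported element: ' + element_type)
    # As in the configuration file, host must not include the scheme.
    return 'https://' + host + path


print(export_url('cloud.example.org', 'calendar', 'alice', 'personal'))
```

Here `cloud.example.org`, `alice` and `personal` are placeholder values standing in for `${FQDN}`, `${NC_USER}` and the element name from the configuration file.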


Other notes#

PhoneTrack app#


Some mobile phone OSs have a bug in interpreting the date sent by GPS satellites. This problem is called GPS week number rollover and sets the date, but not the time, 1024 weeks in the past.

Applications like PhoneTrack should compensate for this problem. A fix was proposed but never merged in the code.

You can patch the code manually in

${HTML_DATA_PATH}/custom_apps/phonetrack/lib/Controller/LogController.php

and then restart your Nextcloud instance.

Important

When the PhoneTrack app updates you need to remember to repeat this procedure.
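To make the 1024 weeks offset concrete, the sketch below shifts a reported timestamp forward by exactly that amount when it is implausibly old. This is an illustration only and not the patch proposed for PhoneTrack. `compensate_rollover` and the "more than half a rollover period in the past" threshold are assumptions made for this example.

```python
import datetime

# One GPS week number rollover period.
ROLLOVER = datetime.timedelta(weeks=1024)


def compensate_rollover(reported: datetime.datetime,
                        now: datetime.datetime) -> datetime.datetime:
    """Add 1024 weeks to `reported` if it lies too far in the past."""
    # Assumed heuristic: a fix older than half a rollover period is
    # treated as affected by the bug.
    if now - reported > ROLLOVER / 2:
        return reported + ROLLOVER
    return reported


now = datetime.datetime(2024, 5, 1, 12, 0, tzinfo=datetime.timezone.utc)
wrong = now - ROLLOVER
print(wrong, '->', compensate_rollover(wrong, now))
```

Because 1024 weeks is a whole number of days, the time of day is left unchanged, which matches the description of the bug above.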

Recognize app#

Important information before using the Recognize for Nextcloud app.

See also

GitHub - nextcloud/recognize: Smart media tagging for Nextcloud: recognizes faces, objects, landscapes, music genres [19]

Error when deleting file or folder - General - Nextcloud community [20]

[Bug]: Call to undefined method OCPFilesEventsNodeNodeDeletedEvent::getSubject() on deltetion of files · Issue #33865 · nextcloud/server · GitHub [21]

[3.2.2] Background scan blocks Nextcloud CRON php job indefinetly with 100% CPU load · Issue #505 · nextcloud/recognize · GitHub [22]


Memories app#

Once the Memories app is installed, or whenever you add external files, you must run a reindexing operation

See also

GitHub - pulsejet/memories: Fast, modern and advanced photo management suite. Runs as a Nextcloud app. [23]

docker-compose exec -T --user www-data app php occ memories:index

News app#

Improve performance#

Opcache#

You can improve caching by changing options in the opcache-recommended.ini file.


create the directory

    mkdir /home/jobs/scripts/by-user/root/docker/nextcloud/phpcfg
    pushd /home/jobs/scripts/by-user/root/docker/nextcloud/phpcfg

create a configuration file

/home/jobs/scripts/by-user/root/docker/nextcloud/phpcfg/opcache-recommended.ini

    opcache.enable=1
    opcache.interned_strings_buffer=512
    opcache.max_accelerated_files=65407
    opcache.memory_consumption=2048
    opcache.save_comments=1
    opcache.revalidate_freq=80

add the docker volume in the docker-compose file

/home/jobs/scripts/by-user/root/nextcloud/docker-compose.yml

    # [ ... ]

        volumes:
          # [ ... ]
          - /home/jobs/scripts/by-user/root/docker/nextcloud/phpcfg/opcache-recommended.ini:/usr/local/etc/php/conf.d/opcache-recommended.ini
          # [ ... ]

    # [ ... ]

run the deploy script

Transactional file locking#

See also

Transactional file locking — Nextcloud latest Administration Manual latest documentation [29]

Footnotes